September 12, 2023 | A trend called “jailbreaking” has emerged among users of AI chatbots: they exploit vulnerabilities to bypass a chatbot’s safety measures, potentially violating ethical guidelines and cybersecurity protocols. A successful jailbreak lets users generate uncensored, unregulated content, which raises ethical concerns. Online communities share jailbreak tactics, fostering a culture of experimentation. Cyber-criminals have also developed tools for malicious purposes that leverage custom large language models. Defensive security teams are working to secure language models, but the field is still in its early stages, and organizations are taking proactive steps to strengthen chatbot security.

Transform Vulnerability Management: How Critical Start & Qualys Reduce Cyber Risk

In a recent webinar co-hosted by Qualys and Critical Start, experts from both organizations discusse...

H2 2024 Cyber Threat Intelligence Report: Key Takeaways for Security Leaders

In a recent Critical Start webinar, cyber threat intelligence experts shared key findings from the H...

Bridging the Cybersecurity Skills Gap with Critical Start’s MDR Expertise

During a recent webinar hosted by CyberEdge, Steven Rosenthal, Director of Product Management at Cri...

2024: The Cybersecurity Year in Review

A CISO’s Perspective on the Evolving Threat Landscape and Strategic Response Introduction 2024 has...

Modern MDR That Adapts to Your Needs: Tailored, Flexible Security for Today’s Threats

Every organization faces unique challenges in today’s dynamic threat landscape. Whether you’re m...

Achieving Cyber Resilience with Integrated Threat Exposure Management

Welcome to the third and final installment of our three-part series Driving Cyber Resilience with Hu...

Why Remote Containment and Active Response Are Non-Negotiables in MDR

You Don’t Have to Settle for MDR That Sucks Welcome to the second installment of our three-part bl...

Choosing the Right MDR Solution: The Key to Peace of Mind and Operational Continuity

Imagine this: an attacker breaches your network, and while traditional defenses scramble to catch up...

Redefining Cybersecurity Operations: How New Cyber Operations Risk & Response™ (CORR) platform Features Deliver Unmatched Efficiency and Risk Mitigation

The latest Cyber Operations Risk & Response™ (CORR) platform release introduces groundbreaking...

The Rising Importance of Human Expertise in Cybersecurity

Welcome to Part 1 of our three-part series, Driving Cyber Resilience with Human-Driven MDR: Insights...

Achieving True Protection with Complete Signal Coverage

Cybersecurity professionals know all too well that visibility into potential threats is no longer a ...

Beyond Traditional MDR: Why Modern Organizations Need Advanced Threat Detection

You Don’t Have to Settle for MDR That Sucks Frustrated with the conventional security measures pro...

The Power of Human-Driven Cybersecurity: Why Automation Alone Isn’t Enough

Cyber threats are increasingly sophisticated, and bad actors are attacking organizations with greate...

Importance of SOC Signal Assurance in MDR Solutions

In the dynamic and increasingly complex field of cybersecurity, ensuring the efficiency and effectiv...

The Hidden Risks: Unmonitored Assets and Their Impact on MDR Effectiveness

In the realm of cybersecurity, the effectiveness of Managed Detection and Response (MDR) services hi...

The Need for Symbiotic Cybersecurity Strategies | Part 2: Integrating Proactive Security Intelligence into MDR

In Part 1 of this series, The Need for Symbiotic Cybersecurity Strategies, we explored the critical ...

Finding the Right Candidate for Digital Forensics and Incident Response: What to Ask and Why During an Interview

So, you’re looking to add a digital forensics and incident response (DFIR) expert to your team. Gr...

The Need for Symbiotic Cybersecurity Strategies | Part I

Since the 1980s, Detect and Respond cybersecurity solutions have evolved in response to emerging cyb...

Critical Start H1 2024 Cyber Threat Intelligence Report

Critical Start is thrilled to announce the release of the Critical Start H1 2024 Cyber Threat Intell...

Now Available! Critical Start Vulnerability Prioritization – Your Answer to Preemptive Cyber Defense.

Organizations understand that effective vulnerability management is critical to reducing their cyber...

Recruiter phishing leads to more_eggs infection

With additional investigative and analytical contributions by Kevin Olson, Principal Security Analys...

2024 Critical Start Cyber Risk Landscape Peer Report Now Available

We are excited to announce the release of the 2024 Critical Start Cyber Risk Landscape Peer Report, ...

Critical Start Managed XDR Webinar — Increase Threat Protection, Reduce Risk, and Optimize Operational Costs

Did you miss our recent webinar, Stop Drowning in Logs: How Tailored Log Management and Premier Thre...

Pulling the Unified Audit Log

During a Business Email Compromise (BEC) investigation, one of the most valuable logs is the Unified...

Set Your Organization Up for Risk Reduction with the Critical Start Vulnerability Management Service

With cyber threats and vulnerabilities constantly evolving, it’s essential that organizations take...

Announcing the Latest Cyber Threat Intelligence Report: Unveiling the New FakeBat Variant

Critical Start announces the release of its latest Cyber Threat Intelligence Report, focusing on a f...

Cyber Risk Registers, Risk Dashboards, and Risk Lifecycle Management for Improved Risk Reduction

Just one of the daunting tasks Chief Information Security Officers (CISOs) face is identifying, trac...

Beyond SIEM: Elevate Your Threat Protection with a Seamless User Experience

Unraveling Cybersecurity Challenges In our recent webinar, Beyond SIEM: Elevating Threat Prote...

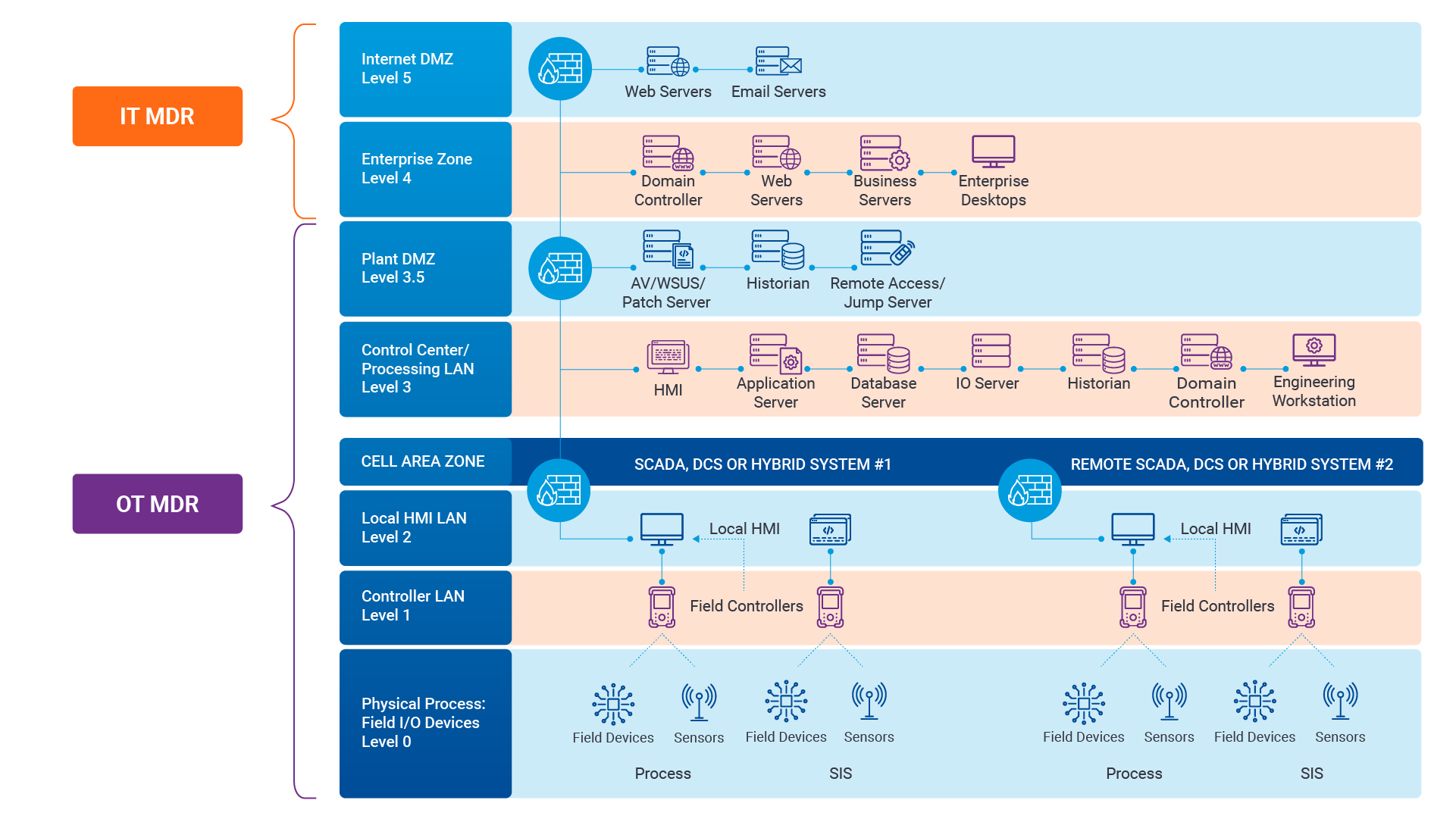

Navigating the Convergence of IT and OT Security to Monitor and Prevent Cyberattacks in Industrial Environments

The blog Mitigating Industry 4.0 Cyber Risks discussed how the continual digitization of the manufac...

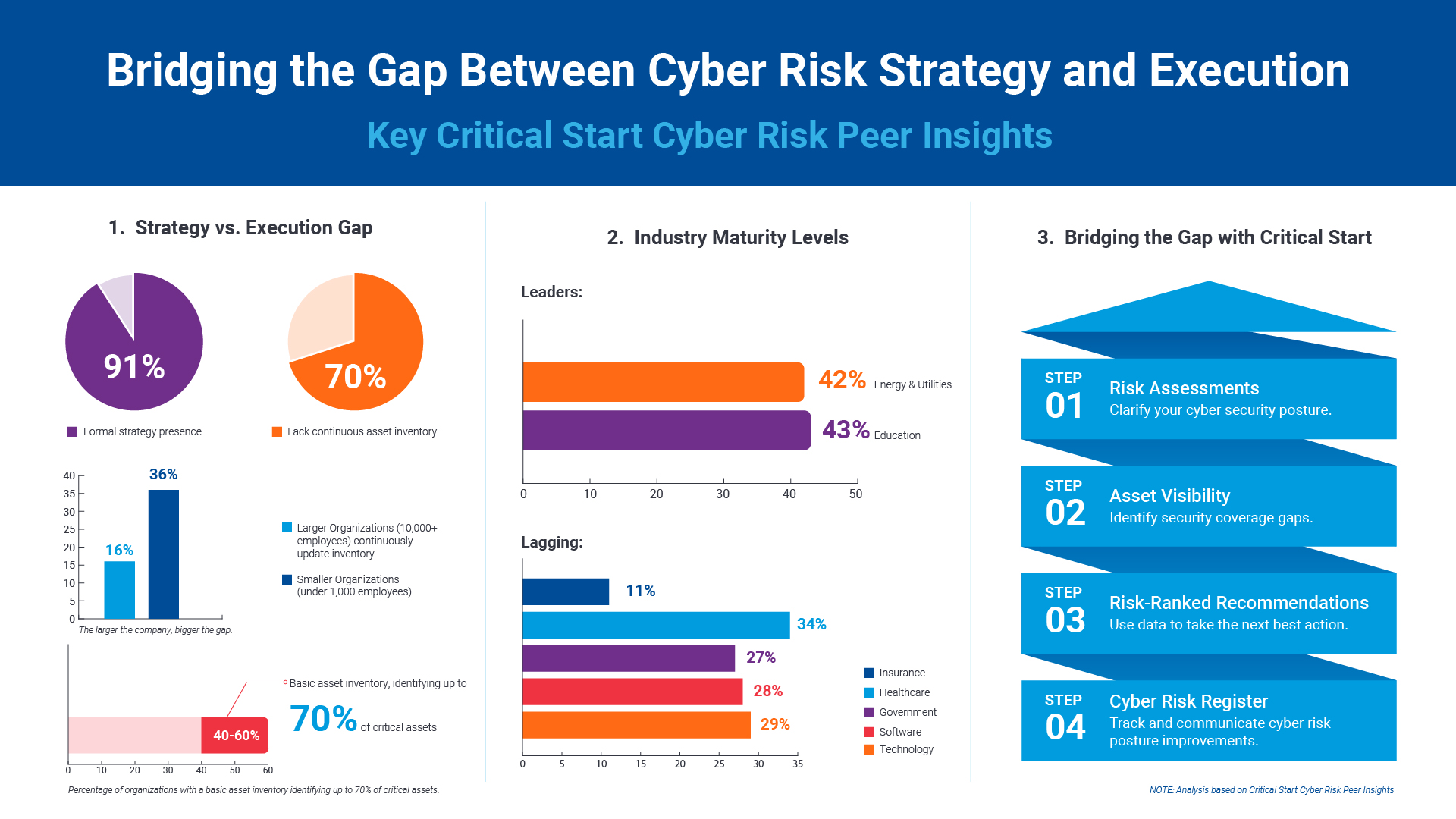

Critical Start Cyber Risk Peer Insights – Strategy vs. Execution

Effective cyber risk management is more crucial than ever for organizations across all industries. C...

Press Release: Critical Start Named a Major Player in IDC MarketScape for Emerging Managed Detection and Response Services 2024

Critical Start is proud to be recognized as a Major Player in the IDC MarketScape: Worldwide Emergin...

Introducing Free Quick Start Cyber Risk Assessments with Peer Benchmark Data

We asked industry leaders to name some of their biggest struggles around cyber risk, and they answer...

Efficient Incident Response: Extracting and Analyzing Veeam .vbk Files for Forensic Analysis

Introduction Incident response requires a forensic analysis of available evidence from hosts and oth...

Mitigating Industry 4.0 Cyber Risks

As the manufacturing industry progresses through the stages of the Fourth Industrial Revolution, fro...

CISO Perspective with George Jones: Building a Resilient Vulnerability Management Program

In the evolving landscape of cybersecurity, the significance of vulnerability management cannot be o...

Navigating the Cyber World: Understanding Risks, Vulnerabilities, and Threats

Cyber risks, cyber threats, and cyber vulnerabilities are closely related concepts, but each plays a...

The Next Evolution in Cybersecurity — Combining Proactive and Reactive Controls for Superior Risk Management

Evolve Your Cybersecurity Program to a balanced approach that prioritizes both Reactive and Proactiv...

CISO Perspective with George Jones: The Top 10 Metrics for Evaluating Asset Visibility Programs

Organizations face a multitude of threats ranging from sophisticated cyberattacks to regulatory comp...

5 Signs Your MDR Isn’t Working — and What to Do About It

Are you confident your MDR is actually reducing risk? If so, how confident? According to recent indu...

Datasheet: Security Services for SIEM

Critical Start’s Security Services for SIEM combines Managed SIEM and MDR for SIEM to deliver ...

Building a Future-Proof Security Stack with Flexible MDR

Technology evolves. Organizations grow. But is your cybersecurity strategy keeping up? Come on; be h...
